Designed for flexibility and scalability, GoForMet-COMET is an Integrated Files & Messages Switching System (IFMSS). It can be delivered as a stand-alone GTS/AFTN data switcher compliant with all relevant WMO/ICAO standards, or as a data communication module within any other G4M system.

GoForMet-COMET

G4M-COMET is built for flexibility and scalability, running the Node.js cluster module on top of an ExpressJS server. The cluster module scales an application by splitting a single process into multiple processes, called workers in Node.js terminology. To take advantage of multi-core systems, it creates child processes (workers) that share all server ports with the main Node.js process (the master, called the primary in current Node.js releases). Workers are spawned with the fork method, so they can communicate with the parent via IPC and pass server handles back and forth. Because workers are separate processes, they can be killed or re-spawned as the program requires without affecting other workers. As long as at least one worker is alive, the server continues to accept connections. If no workers are alive, existing connections are dropped and new connections are refused.
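The fork-and-respawn behaviour described above can be illustrated with a minimal sketch. It is not COMET's actual code: it uses the built-in `node:http` server instead of ExpressJS to stay dependency-free, and the helper names, the `COMET_WORKERS` environment variable, and port 3000 are assumptions made for illustration only.

```javascript
const cluster = require("node:cluster");
const http = require("node:http");
const os = require("node:os");

// Hypothetical helper: decide how many workers to fork.
// Falls back to the number of CPU cores when no valid override is given.
function resolveWorkerCount(configured, cores = os.cpus().length) {
  const n = Number.parseInt(configured, 10);
  return Number.isInteger(n) && n > 0 ? n : Math.max(1, cores);
}

// Primary process: fork the workers and replace any worker that exits.
function startPrimary(workerCount) {
  for (let i = 0; i < workerCount; i += 1) {
    cluster.fork();
  }
  cluster.on("exit", (worker, code, signal) => {
    console.log(`worker ${worker.process.pid} exited (${signal || code}); respawning`);
    cluster.fork();
  });
}

// Worker process: every worker listens on the same port, and the
// cluster module distributes incoming connections among them.
function startWorker(port) {
  http
    .createServer((req, res) => {
      res.writeHead(200, { "Content-Type": "text/plain" });
      res.end(`handled by worker ${process.pid}\n`);
    })
    .listen(port);
}

// Entry point; deliberately not invoked automatically in this sketch.
function main() {
  if (cluster.isPrimary) {
    // COMET_WORKERS is an assumed variable name, not a documented setting.
    startPrimary(resolveWorkerCount(process.env.COMET_WORKERS));
  } else {
    startWorker(3000);
  }
}
```

Calling `main()` at the end of the file starts the cluster. This sketch respawns a worker on every exit, including deliberate shutdowns; a production setup would normally distinguish the two cases.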

Environmental data is now available from many sources and in various formats, and there is a real need to collect, store, and analyze all of it in one system. Whether the data comes from similar agencies or partner institutions, is downloaded from sites around the world, or is part of a crowdsourced collection, it needs normalization before the system can process it. Normalization (or data cleaning) is the process that:

- reorganizes the data so that it appears consistent across all records and fields,

- eliminates redundancy (duplicates).

The result is standardized information that can pass to the next pre-processing module, Data Quality Control. Once the data has received its quality coefficients and the relevant metadata has been created, it is sent to the Storage System. The Archives Manager is the module responsible for applying the data policy in this process.
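As a rough illustration of the two normalization steps, the sketch below maps source-specific field names onto one common record shape and then drops duplicates. The field names, the alias table, and the duplicate key (station plus observation time) are all hypothetical; the source does not describe COMET's actual normalization rules.

```javascript
// Hypothetical alias table: source-specific field name -> common field name.
const FIELD_ALIASES = new Map([
  ["station", "stationId"],
  ["station_id", "stationId"],
  ["stationId", "stationId"],
  ["time", "observedAt"],
  ["timestamp", "observedAt"],
  ["observedAt", "observedAt"],
  ["temp", "temperatureC"],
  ["temperature", "temperatureC"],
  ["temperatureC", "temperatureC"],
]);

// Step 1: reorganize one raw record into the common shape.
function normalizeRecord(raw) {
  const out = {};
  for (const [key, value] of Object.entries(raw)) {
    const name = FIELD_ALIASES.get(key);
    if (name !== undefined) out[name] = value; // unknown fields are dropped here
  }
  if (typeof out.stationId === "string") {
    out.stationId = out.stationId.trim().toUpperCase();
  }
  if (out.observedAt !== undefined) {
    // Throws a RangeError on unparseable timestamps.
    out.observedAt = new Date(out.observedAt).toISOString();
  }
  if (out.temperatureC !== undefined) {
    out.temperatureC = Number(out.temperatureC);
  }
  return out;
}

// Step 2: eliminate duplicates, keeping the first record per station and time.
function deduplicate(records) {
  const seen = new Set();
  const unique = [];
  for (const record of records) {
    const key = `${record.stationId}|${record.observedAt}`;
    if (!seen.has(key)) {
      seen.add(key);
      unique.push(record);
    }
  }
  return unique;
}

function normalizeAll(rawRecords) {
  return deduplicate(rawRecords.map(normalizeRecord));
}
```

With this sketch, two reports of the same observation that arrive under different field names collapse into a single standardized record.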

Once data is received (pushed or pulled) by one of the COMET circuits, it is placed in a Processing Queue to be consumed (analyzed, normalized, quality-controlled, and stored) by one of the available Processing Workers. For efficiency, a COMET cluster by default launches as many Processing Workers as there are cores on the system hardware; this number can be configured. The COMET functional diagram illustrates this flow.
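The queue-and-workers pattern can be sketched in a few lines. This is an in-process stand-in that uses asynchronous consumers rather than separate worker processes, and the `ProcessingQueue` class and its methods are hypothetical names, not COMET's API. It only shows the default of one consumer per core and how items are shared out among consumers.

```javascript
const os = require("node:os");

class ProcessingQueue {
  // handler: async function applied to each item (analyze, normalize, QC, store).
  // workerCount: defaults to the number of CPU cores, but can be overridden.
  constructor(handler, workerCount = Math.max(1, os.cpus().length)) {
    this.handler = handler;
    this.workerCount = workerCount;
    this.items = [];
  }

  // Called by a circuit when data has been received.
  push(item) {
    this.items.push(item);
  }

  // Start workerCount consumers; each takes the next item until the queue is empty.
  async drain() {
    const results = [];
    const consume = async (workerId) => {
      while (this.items.length > 0) {
        const item = this.items.shift();
        results.push({ workerId, output: await this.handler(item) });
      }
    };
    const consumers = Array.from({ length: this.workerCount }, (_, id) => consume(id));
    await Promise.all(consumers);
    return results;
  }
}
```

A circuit would call `push()` for each received message, and `drain()` resolves once every queued item has been handled by one of the consumers.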

Main functionalities

Data ingestion and processing of almost all kinds of standard formats and meteorological-specific data:

- all GTS / WIS standard formats (including BUFR, SYNOP, TEMP, PILOT, GRIB, HDF5, SIGMET)

- all AFTN standard formats (including METAR and TAF)

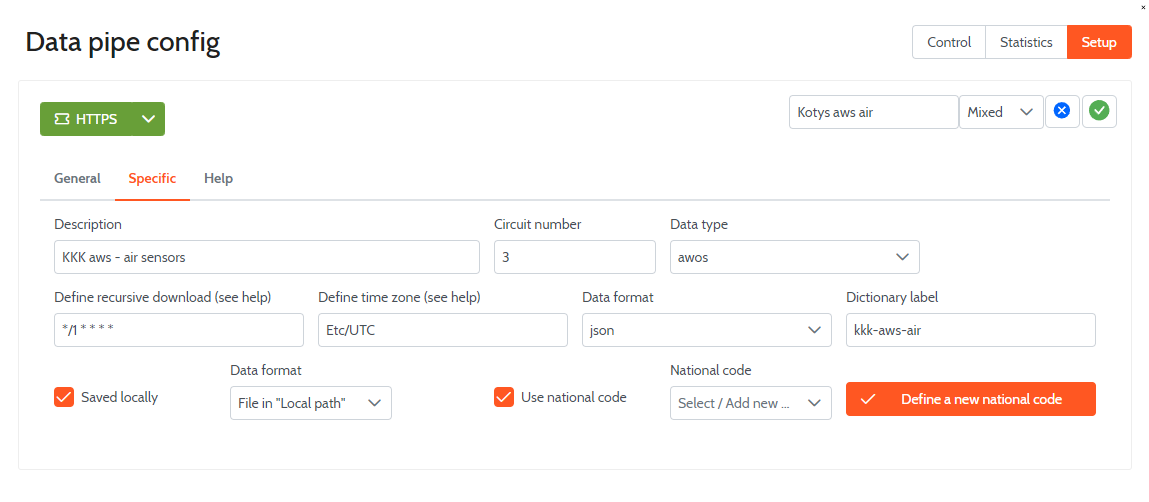

- data from Automatic Weather Observation Systems (AWOS)

- NetCDF, GeoTIFF, GeoPDF, XML, JSON, CSV

- Data switching (transmission on certain channels according to a set of predefined rules)

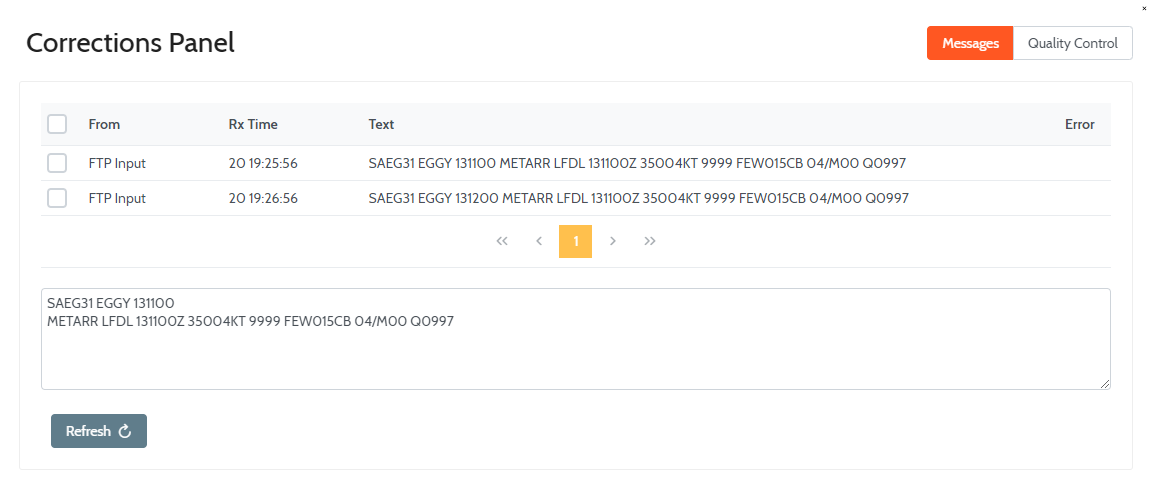

- Data normalization

- Data Quality Control (QC) - level 1

- Data processing

- Data storage

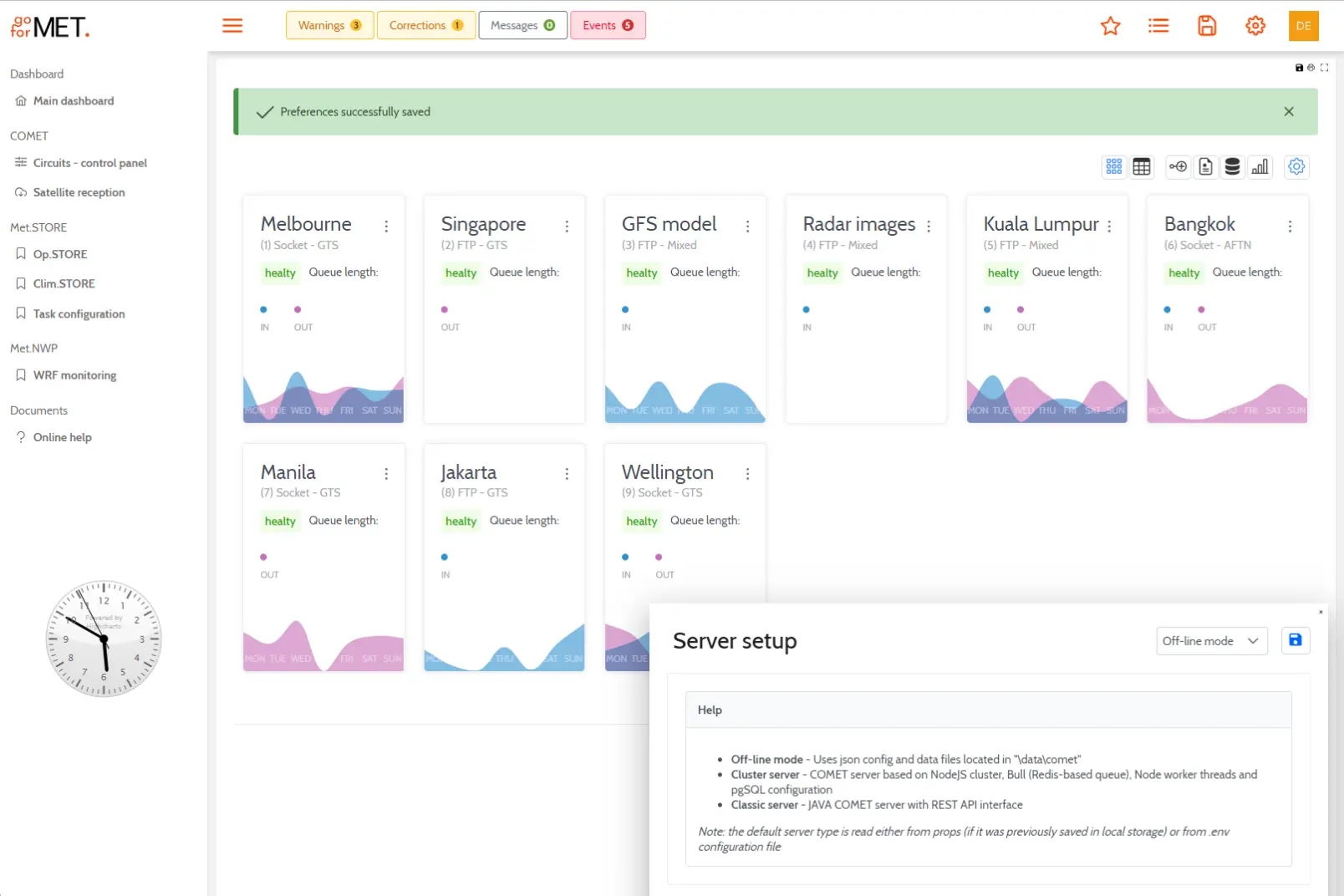
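The data switching item in the list above (transmission on certain channels according to a set of predefined rules) could look roughly like the following sketch. It assumes routing is keyed on the WMO abbreviated heading (`TTAAii CCCC YYGGgg`, with an optional `BBB` group) and uses a simplified pattern for it. The rule table, channel names, and function names are hypothetical, and the sample headings in the usage note are made up.

```javascript
// Hypothetical rule table: each rule matches fields of the abbreviated
// heading and lists the output channels for matching bulletins.
const RULES = [
  { ttaaii: /^SA/, cccc: /.*/, channels: ["aftn-out"] },
  { ttaaii: /^S[MIN]/, cccc: /.*/, channels: ["gts-out"] },
  { ttaaii: /.*/, cccc: /^LR/, channels: ["archive"] },
];

// Parse "TTAAii CCCC YYGGgg [BBB]" into its fields; returns null if malformed.
function parseHeading(line) {
  const match = /^([A-Z]{4}\d{2}) ([A-Z]{4}) (\d{6})(?: ([A-Z]{3}))?$/.exec(line.trim());
  if (!match) return null;
  return { ttaaii: match[1], cccc: match[2], yygggg: match[3], bbb: match[4] || null };
}

// Collect the channels of every rule that matches, without duplicates.
function selectChannels(heading, rules = RULES) {
  const channels = new Set();
  for (const rule of rules) {
    if (rule.ttaaii.test(heading.ttaaii) && rule.cccc.test(heading.cccc)) {
      rule.channels.forEach((channel) => channels.add(channel));
    }
  }
  return [...channels];
}
```

For example, `selectChannels(parseHeading("SAXX31 LRXX 121200"))` matches the first and third rules of this table, so the bulletin would be queued for both the `aftn-out` and `archive` channels.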

The COMET Graphical User Interface

Part of the Web-Screens module, the COMET GUI has the following functionalities:

- User Authentication, authorization, and management

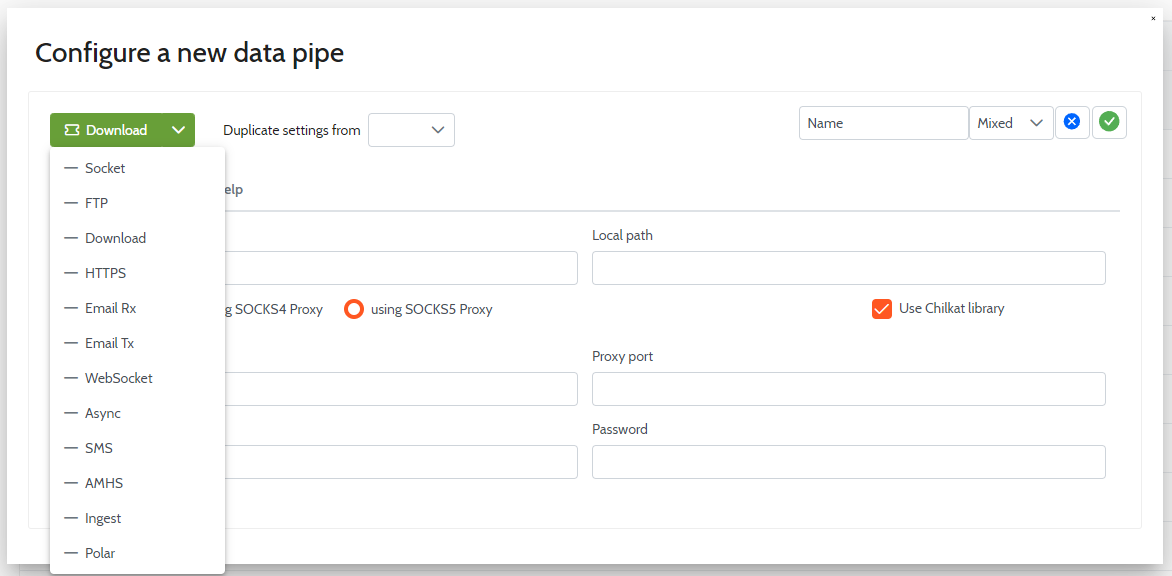

- Configure the circuits for incoming data using a graphical panel

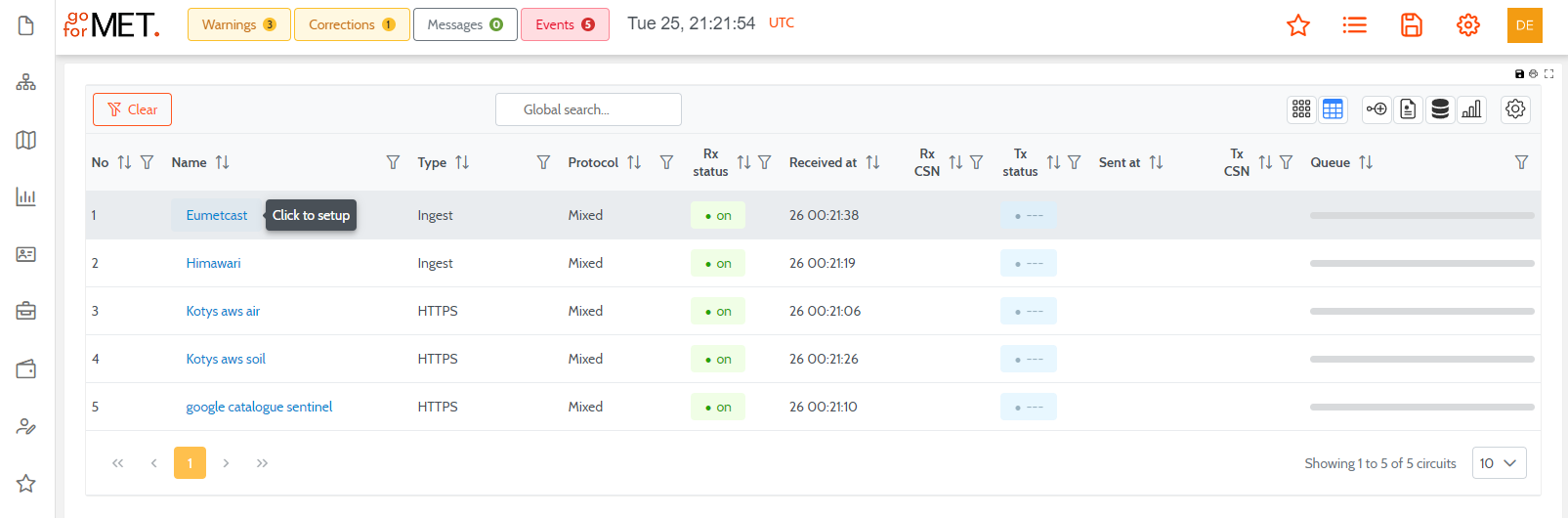

- Monitor the activity of the circuits in real time

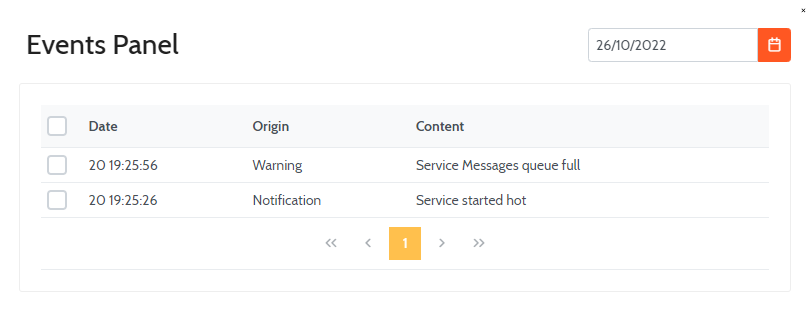

- Alert and notification modules remain permanently active on top of all other modules currently in use

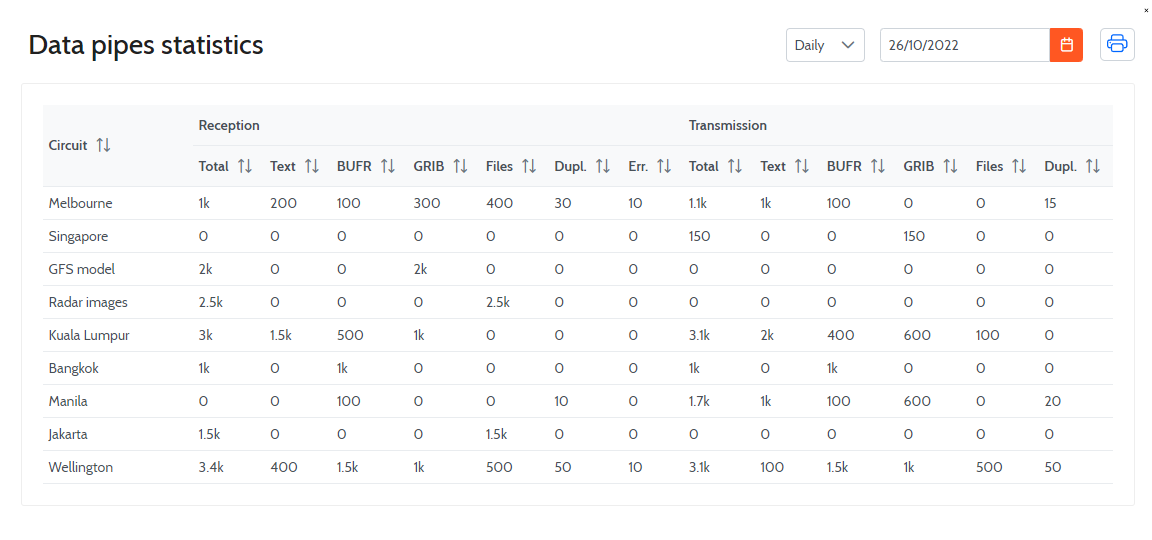

- Display statistics and logs about the incoming and processed traffic

- Full statistics and logs are available for GTS channels, in accordance with WMO regulations.

- A standard third-party library is used for general monitoring and logging of all system components.

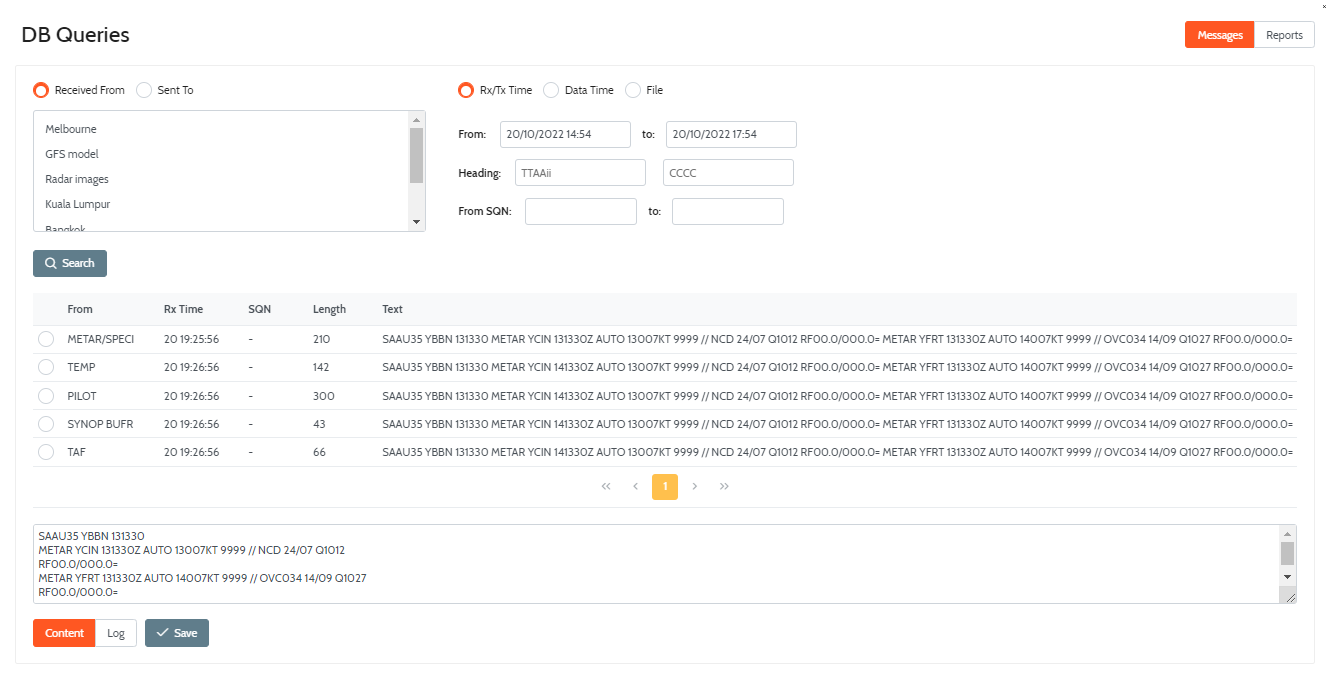

- The GUI supports querying the Operational Database (ODB) for both data and metadata.